A few years ago, creating a high-quality marketing video required an entire team (writers, editors, animators) and hours of production time. Today, that same task can be completed in minutes with the help of AI. From generating voiceovers to producing fully edited video clips, tools like Runway, Pika, Synthesia, and HeyGen are changing the way brands create content.

And the growth is staggering. According to Wyzowl’s 2024 report, 91% of marketers now use video as a marketing tool, and AI-generated video usage has tripled in just one year. But here’s the catch: producing AI videos is easy; knowing which ones actually perform well is the real challenge.

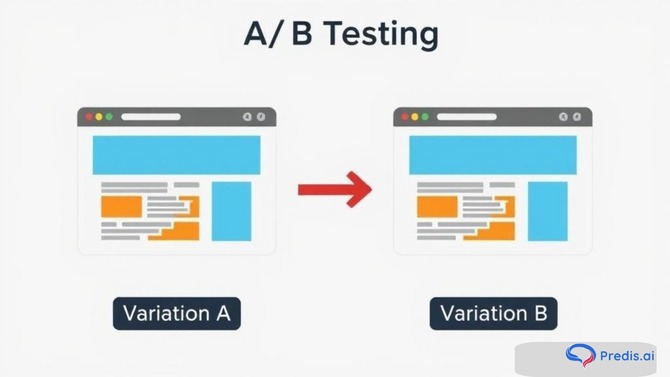

You might have two equally polished videos that look great. One opens with an upbeat track and quick cuts; the other starts slow and emotional. Which version grabs more attention? Which drives more conversions? That’s where A/B testing comes in: the process of comparing two versions of content to see which performs better.

A/B testing transforms your creative guesswork into measurable insights. Whether you’re running ads, posting reels, or testing YouTube intros, learning how to A/B test AI-generated videos is one of the smartest ways to make data-driven creative decisions.

Let’s break it down step by step.

TL;DR 🖋

A/B testing helps you compare two AI-generated video versions to see which performs better. Start by defining your goal, create distinct but focused variants, and test them under equal conditions. Track key metrics like watch time and click-through rate, then apply your insights to future campaigns. AI tools can simplify variant creation and analysis, but the real power comes from combining data with human creativity. Start small, test often, and let your audience guide what truly works.

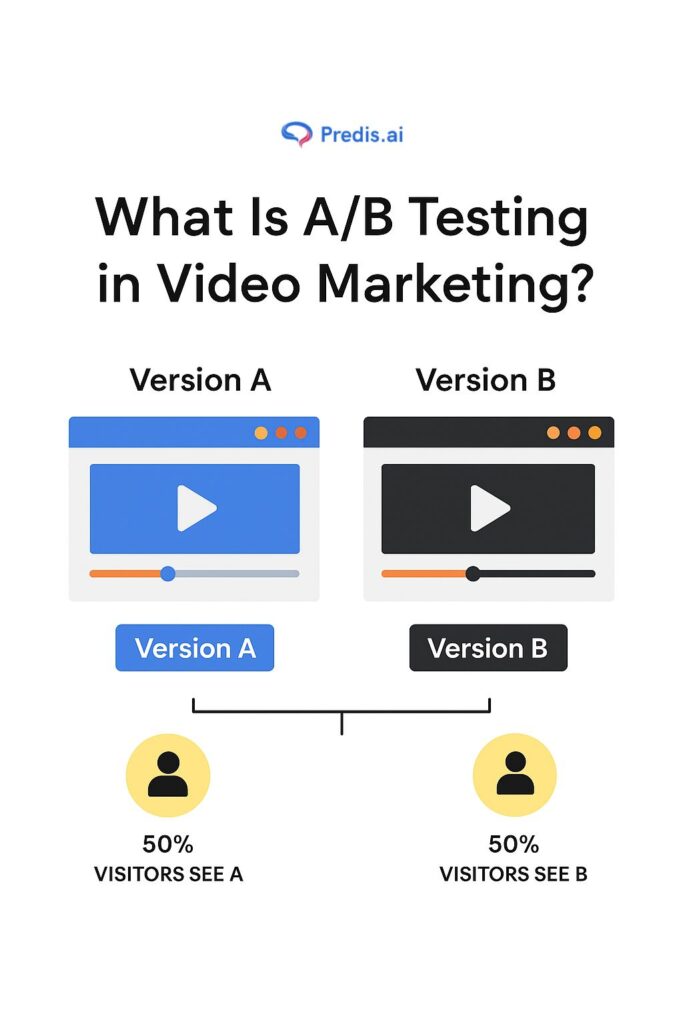

What Is A/B Testing in Video Marketing?

At its core, A/B testing (or split testing) is about comparison. You create two versions of the same video, Version A and Version B, that differ in one key aspect. Then you show both versions to similar audiences under the same conditions and see which performs better.

In video marketing, that difference could be:

- The first 5 seconds of the intro

- The background music or tone

- The voiceover style

- The CTA (call to action) placement

For example, you might test two versions of an Instagram ad: one starts with a product shot, the other with a person using the product. After running both for a few days, you notice the human-focused version gets 35% higher engagement. That’s your winning creative.

With AI tools producing video variants in seconds, A/B testing has become easier than ever. Instead of spending hours re-editing clips manually, you can generate multiple alternatives and let your audience decide what works best.

Common platforms that support A/B testing for videos include:

- Meta Ads Manager (Facebook & Instagram)

- YouTube Studio (via Experiment tools)

- Google Optimize alternatives like VWO or Optimizely

- TikTok Ads Manager for creative split tests

Why A/B Testing Matters for AI-Generated Videos

AI gives you speed and scale, but it doesn’t guarantee emotional connection. Just because a video looks polished doesn’t mean it works for your audience. A/B testing bridges that gap by revealing what your viewers actually respond to, not what you think they will.

Here’s why it’s essential:

1. Data Over Assumptions

Every marketer, no matter how experienced, has creative bias. It’s human nature to favor the version that feels right to us. Maybe you prefer a certain color tone or background music, or you’re attached to the phrasing of your call-to-action. But audiences don’t always share that preference, and guessing often leads to wasted ad spend.

A/B testing takes emotion out of the equation and replaces it with evidence. It lets you see, in real numbers, which video drives more clicks, watch time, or conversions. For instance, an e-commerce brand might assume that a sleek, minimalist video works best, but testing could reveal that audiences actually engage more with colorful, lifestyle-driven content.

That’s the beauty of data: it tells the truth, even when it challenges your creative instincts. When decisions are guided by measurable outcomes instead of gut feelings, your campaigns naturally get smarter over time.

2. Continuous Optimization

AI has made it incredibly easy to iterate: to keep improving your content rather than guessing once and hoping for the best. With A/B testing, each experiment becomes a feedback loop. You learn something valuable every single time: what works, what doesn’t, and what might work next.

Let’s say your first test reveals that videos with on-screen text outperform those without it. You can take that learning and build your next test around text placement, font style, or animation timing. Over time, those micro-improvements stack up into massive performance gains.

This cycle of testing, learning, and refining turns your AI tool into a precision instrument rather than a creative gamble. Instead of producing dozens of random video versions, you’re making purposeful adjustments backed by insight. The result? A consistent lift in engagement and a better understanding of your audience’s evolving behavior.

3. Better ROI

Every marketing dollar counts, especially when you’re scaling campaigns. A/B testing helps ensure that your investment goes where it matters most: toward creatives that actually deliver results.

Without testing, brands often pour money into a single video and hope it performs. But with A/B testing, you get to see which version drives more clicks, conversions, or leads before increasing your budget. It’s like trying on two different strategies in miniature before committing to the winner.

For example, a SaaS startup might run two AI-generated video ads: one focusing on emotional storytelling, the other on product features. The test could reveal that emotional storytelling gets twice the click-through rate, meaning future campaigns should lean in that direction. That’s a direct ROI boost driven by insight, not luck.

4. Understanding Audience Psychology

One of the most valuable outcomes of A/B testing isn’t just finding the “better” version; it’s understanding why your audience prefers it.

When you consistently analyze what your viewers respond to, you start uncovering emotional and behavioral patterns. Maybe your audience reacts more to authenticity than perfection. Maybe they stay longer when they hear a human voice rather than an AI narration. Or perhaps they engage more with videos that make them feel inspired rather than informed.

These insights go beyond one campaign; they inform your entire marketing strategy. They help you refine your brand tone, visual style, and storytelling across all platforms.

For example, a fintech brand discovered through repeated A/B tests that videos featuring human interaction (a person explaining a concept or sharing a testimonial) outperformed fully animated versions. That finding didn’t just improve their ads; it reshaped how they communicated on social media, email, and their website.

Step-by-Step Guide: How to A/B Test AI-Generated Videos

Let’s walk through a complete A/B testing workflow that even a beginner can follow confidently.

Step 1: Define Your Objective

Before creating any variants, decide what success looks like. What do you want to learn?

- Are you testing which intro drives more engagement?

- Are you comparing voice styles to see which improves retention?

- Are you testing calls to action for higher conversions?

Common objectives include:

- Increasing click-through rate (CTR)

- Improving watch time or view completion rate

- Boosting conversion rate or sign-ups

Be specific. “I want more views” is vague. “I want a 15% increase in average watch time” gives you direction and measurable results.
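A specific target also tells you roughly how much traffic the test needs. The sketch below is a standard two-proportion sample-size approximation for rate metrics such as CTR or completion rate; it is not taken from any particular platform. The function name, the 5% significance level, and the 80% power default are assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def viewers_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate viewers needed per variant to detect a relative lift.

    baseline_rate: current rate of the metric (e.g. 0.02 for a 2% CTR).
    relative_lift: the improvement you hope to detect (e.g. 0.15 for +15%).
    alpha and power are conventional defaults, assumed here for illustration.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1):
        raise ValueError("rates must fall strictly between 0 and 1")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (p1 + p2) / 2

    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Hypothetical inputs: a 2% baseline CTR and a hoped-for 15% relative lift.
    print(viewers_per_variant(0.02, 0.15))
```

With a low baseline rate and a modest lift, the estimate lands in the tens of thousands of viewers per variant. That is why small tests often can’t separate a real 15% improvement from noise.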

Step 2: Create Your Variants

AI video tools are your creative playground. The goal here isn’t to produce random variations; it’s to isolate one variable at a time so you know what made the difference.

What to vary:

- Intro style – fast vs. slow pacing

- Voiceover tone – friendly vs. formal

- CTA wording – “Buy Now” vs. “Get Started”

- Color scheme or lighting – bright vs. cinematic

- Background music – upbeat vs. calm

If you want inspiration for creating high-performing ad variations, this guide on smart AI-powered ad variations for effective A/B testing breaks down practical examples you can try immediately.

Keep everything else identical. The moment you change too many elements, it’s impossible to know which factor influenced the outcome.

Tip: Label your files clearly (e.g., “Video_A_fast_intro.mp4” and “Video_B_slow_intro.mp4”). It saves confusion later.
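If you keep variant settings in a script or spreadsheet export, the one-variable rule can be checked mechanically. Here is a minimal sketch; the `VideoVariant` class, its field names, and both helper functions are hypothetical and simply mirror the list of things to vary above.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class VideoVariant:
    # Hypothetical fields mirroring the "What to vary" list.
    label: str      # "A" or "B"
    intro: str      # e.g. "fast" or "slow"
    voiceover: str  # e.g. "friendly" or "formal"
    cta: str        # e.g. "Buy Now" or "Get Started"
    color: str      # e.g. "bright" or "cinematic"
    music: str      # e.g. "upbeat" or "calm"


def changed_fields(a, b):
    """Return the creative fields (ignoring the label) that differ."""
    da, db = asdict(a), asdict(b)
    return [key for key in da if key != "label" and da[key] != db[key]]


def file_name(variant, tested_field):
    """Build a name like Video_A_fast_intro.mp4 from the tested field."""
    value = getattr(variant, tested_field).lower().replace(" ", "_")
    return f"Video_{variant.label}_{value}_{tested_field}.mp4"


if __name__ == "__main__":
    a = VideoVariant("A", "fast", "friendly", "Buy Now", "bright", "upbeat")
    b = VideoVariant("B", "slow", "friendly", "Buy Now", "bright", "upbeat")
    diff = changed_fields(a, b)
    if len(diff) != 1:
        raise SystemExit(f"Expected exactly one changed field, got: {diff}")
    print(file_name(a, diff[0]), file_name(b, diff[0]))
```

If `changed_fields` returns more than one entry, the test is confounded, and it’s worth regenerating one of the variants before spending any budget.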

Step 3: Choose Your Testing Platform

Where you run your test depends on your campaign goal.

- Social Media Platforms: Use Instagram Reels or TikTok to test engagement metrics like views, likes, and shares.

- Ad Platforms: Meta Ads Manager or Google Ads allow controlled A/B tests with equal budgets and identical targeting.

- YouTube Studio: Use YouTube Experiments (for thumbnails and titles) or track audience retention analytics for content tests.

- Landing Pages / Emails: If your video leads to a sign-up or purchase page, integrate it with A/B tools like VWO, Unbounce, or Mailchimp.

The key is consistency: both versions should reach comparable audiences under the same conditions.

Step 4: Run the Test Properly

An A/B test is only as good as its execution. Here’s what to keep in mind:

- Test Duration – Run your test long enough to gather meaningful data. 7–14 days is ideal for ads.

- Equal Conditions – Same time of day, budget, targeting, and placement.

- Avoid Cross-Contamination – Don’t test two versions with overlapping audiences in the same feed.

- Stay Objective – Don’t declare a winner after one day of results. Early data can be misleading.

If you’re testing organically (not through ads), post the two versions at different times or days but under similar contexts: for example, the same hashtags, similar captions, and within the same week.
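Ad platforms handle audience splitting for you. If you embed the video on your own landing page, though, you have to do the split yourself. One common approach is to hash a stable viewer identifier so that each person always sees the same version. The sketch below assumes you have such an identifier (a cookie or account ID); the function name and test name are hypothetical.

```python
import hashlib


def assign_variant(viewer_id: str, test_name: str) -> str:
    """Deterministically assign a viewer to variant "A" or "B".

    Hashing the test name together with the viewer ID means the same person
    always gets the same version within a test, while different tests split
    the audience independently of each other.
    """
    digest = hashlib.sha256(f"{test_name}:{viewer_id}".encode("utf-8")).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


if __name__ == "__main__":
    # Hypothetical viewer IDs, for illustration only.
    for viewer in ("viewer-001", "viewer-002", "viewer-003"):
        print(viewer, assign_variant(viewer, "intro-pacing-test"))
```

Because the assignment depends only on the identifier, a returning visitor never flips between versions, which keeps the two audiences from contaminating each other.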

Step 5: Measure and Analyze Results

Once enough data is collected, it’s time to dive into the numbers.

Key metrics to evaluate:

- Engagement rate: Likes, shares, and comments per view.

- Click-through rate (CTR): How many people clicked your link or CTA.

- Watch time / Retention: How long viewers stayed engaged.

- Conversion rate: Purchases, sign-ups, or downloads.

Visual dashboards help simplify analysis. Use Google Analytics, Meta Insights, or YouTube Analytics to identify patterns.
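If you export raw counts instead of reading a dashboard, the metrics above reduce to a few ratios. This is a rough sketch: the `VariantStats` fields are hypothetical, and the definitions used (CTR as clicks per view, conversion rate as conversions per click) are assumptions, since each platform defines these slightly differently.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    # Hypothetical raw counts exported from an analytics tool.
    views: int
    likes: int
    shares: int
    comments: int
    clicks: int
    conversions: int
    total_watch_seconds: float


def summarize(stats):
    """Compute the four key metrics from raw counts."""
    if stats.views <= 0:
        raise ValueError("views must be positive")
    return {
        "engagement_rate": (stats.likes + stats.shares + stats.comments) / stats.views,
        "ctr": stats.clicks / stats.views,
        "avg_watch_seconds": stats.total_watch_seconds / stats.views,
        # Assumption: conversions are measured per click, not per view.
        "conversion_rate": stats.conversions / stats.clicks if stats.clicks else 0.0,
    }


def relative_lift(metric_a, metric_b):
    """Relative change of B over A, e.g. 0.25 means B is 25% higher."""
    if metric_a == 0:
        raise ValueError("baseline metric must be non-zero")
    return (metric_b - metric_a) / metric_a
```

Running `summarize` on both variants and passing each pair of values to `relative_lift` gives the “X% higher” figures that usually end up in a report.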

Ask yourself:

- Which version held attention longer?

- Which one drove more clicks?

- Did the engagement trend hold across demographics?

Keep a record of your insights; over time, you’ll notice recurring themes that define your brand’s “creative DNA.”
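Before calling a winner, it helps to check whether the gap between the two versions could plausibly be noise. One common way to do that for rate metrics like CTR is a two-proportion z-test, sketched below. The function name is hypothetical, and the normal approximation it relies on assumes each variant has a reasonably large number of views.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Return (z, two-sided p-value) for the difference in click rates."""
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (0.05 is a common threshold) suggests the difference is unlikely to be chance alone. A large one means the test needs more data before you declare a winner.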

Step 6: Learn and Apply Insights

A/B testing is not a one-time task. The real value lies in applying what you’ve learned.

- Let’s say you discover your audience prefers videos with conversational voiceovers instead of robotic narration. That’s not just one campaign insight; it’s a creative direction. Use it to shape future videos, ad scripts, and tone.

- Some AI tools, like Adobe Sensei or Veed.io Insights, even allow data-driven optimizations where the AI suggests edits based on viewer behavior. But remember, data guides — creativity decides.

Every test teaches you something. Stack those learnings, and your future campaigns become smarter by design.

What to Test in Your AI-Generated Videos

If you’re new to A/B testing, start with small, focused experiments. Here are practical areas to explore:

1. Video Hooks

The first 3–5 seconds determine if someone keeps watching. Try testing:

- A question vs. a bold statement

- Human face vs. product shot intro

2. Voice and Tone

The narrator’s style can shift perception.

- Version A: calm, professional voice

- Version B: energetic, friendly voice

3. CTA Placement

Experiment with where and how you ask viewers to take action.

- CTA at the end vs. mid-video reminder

- “Learn More” vs. “Try It Today”

4. Visual Style

Play with background color, lighting, and transitions. Small design shifts can impact watch behavior.

5. Music and Emotion

Background score influences mood. Test upbeat music versus cinematic scoring and note changes in engagement.

6. Titles and Thumbnails (for YouTube)

Thumbnails are often your first A/B test. Try contrasting visuals and track click-throughs.

Keep a testing journal or digital spreadsheet of your results. Over time, you’ll build your own personalized formula for what consistently performs well.
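If a spreadsheet feels too manual, the journal can be a plain CSV file that a script appends to after every test. The sketch below is one possible layout; the column names and the `append_entry` helper are assumptions, not a standard format.

```python
import csv

# Hypothetical column layout for a testing journal.
FIELDS = [
    "date", "platform", "variable_tested",
    "variant_a", "variant_b", "metric",
    "result_a", "result_b", "winner",
]


def append_entry(stream, entry, write_header=False):
    """Write one test result as a CSV row to an open text stream.

    Missing columns are left blank; unknown columns raise ValueError,
    which catches typos in field names early.
    """
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    if write_header:
        writer.writeheader()
    writer.writerow(entry)
```

In practice you might open the file with `open("journal.csv", "a", newline="")` and pass `write_header=True` only for the very first entry.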

How AI Can Help You Optimize the Testing Process

AI doesn’t just generate videos; it can also make the entire testing and optimization workflow smoother, faster, and smarter. Think of it as your behind-the-scenes assistant, helping you uncover insights that would take weeks to find manually.

1. Automated Variant Creation

Traditionally, creating multiple versions of a video for testing meant re-editing the same footage over and over: changing a line, adjusting the music, or trying a new visual cut. AI has completely changed that.

Modern tools can automatically generate hundreds of subtle video variations in minutes. Want to test a different intro line, switch the tone of voiceover, or adjust color grading to evoke a different mood? AI editors like Runway, Synthesia, or Pika Labs can handle that instantly.

This means marketers can focus on strategy instead of repetitive editing work. You can even automate subtitle styles, pacing, or call-to-action overlays to see which combination captures attention longer. The beauty is that these changes don’t require a professional editor; anyone can experiment with confidence.

2. Predictive Analytics

Here’s where things get smarter. AI can analyze historical performance data to predict which variant has the highest potential before you even launch the test.

Platforms like VWO, Predis.ai, or Jasper Campaigns use machine learning to study engagement trends — everything from color psychology to emotional tone — and forecast likely outcomes. This can save you from wasting ad spend on weak creatives.

For instance, if AI notices that your audience tends to engage more with videos featuring a conversational voiceover rather than text-based narration, it will suggest optimizing future versions accordingly. Essentially, AI becomes your early-warning system for underperforming ideas.

3. Performance Analysis

Once your test is live, the real magic happens in how AI interprets results. Instead of manually combing through metrics like click-through rate, average view time, or engagement percentage, AI analytics platforms can process massive datasets in seconds and surface insights you might miss.

It can detect patterns across different demographics, time zones, and even emotional cues within the video. For example, AI might find that videos featuring smiling faces in the first three seconds lead to a 20% higher completion rate, a micro-insight that could redefine your creative strategy.

Tools like Google’s Performance Max insights or VidIQ AI Analytics don’t just report numbers; they aim to show why those numbers changed. That’s what makes data actionable rather than overwhelming.

4. Continuous Learning

A/B testing isn’t just a one-time activity — it’s an ongoing learning loop. Modern ad systems like Meta Ads Manager and Google Ads now use AI-driven optimization. Once they identify a clear winner between two creatives, they automatically shift delivery and budget toward that version.

Over time, the algorithms learn your audience preferences at a granular level — what visuals stop the scroll, which CTAs convert, and when engagement drops. This creates a self-improving feedback cycle where each campaign gets a little smarter than the last.
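The ad platforms don’t publish the exact algorithms behind this budget shifting, so the sketch below is not how Meta or Google do it. It shows one well-known technique for the same idea, Thompson sampling, run on simulated data. The function name and the click rates used in the simulation are hypothetical.

```python
import random


def thompson_allocate(true_rates, rounds, seed=0):
    """Simulate shifting impressions toward the better-performing variant.

    true_rates: hypothetical click rates per variant, used only to simulate
    viewer behavior. A real system would observe clicks, not know the rates.
    Returns the number of impressions each variant received.
    """
    rng = random.Random(seed)
    names = list(true_rates)
    successes = {name: 0 for name in names}
    failures = {name: 0 for name in names}
    impressions = {name: 0 for name in names}

    for _ in range(rounds):
        # Draw a plausible click rate for each variant from its Beta posterior.
        draws = {
            name: rng.betavariate(successes[name] + 1, failures[name] + 1)
            for name in names
        }
        chosen = max(draws, key=draws.get)  # show the variant with the best draw
        impressions[chosen] += 1
        if rng.random() < true_rates[chosen]:  # simulated click
            successes[chosen] += 1
        else:
            failures[chosen] += 1
    return impressions


if __name__ == "__main__":
    print(thompson_allocate({"A": 0.05, "B": 0.15}, rounds=5000))
```

Early on, both variants get shown often. As evidence accumulates, most impressions drift toward the stronger one, which is the general behavior described above.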

However, even the most advanced AI needs human oversight. The system can optimize based on engagement, but it doesn’t understand deeper brand values, cultural nuances, or emotional storytelling. That’s where your intuition, empathy, and creative instincts come in.

Real-World Case Studies

Theory is great, but numbers only come alive when you see how real brands apply them. The truth is, most marketers learn more from watching what others tested than from any guide or tutorial. A/B testing with AI-generated videos isn’t just a buzzword; it’s already reshaping how companies understand and connect with their audiences.

From small startups tweaking ad intros to global brands optimizing emotional tone, these real-world examples show how a few smart experiments can lead to massive improvements in engagement, click-through rates, and conversions. Let’s look at how different industries are putting AI-driven testing into action and what lessons you can steal for your own campaigns.

Case Study 1: Small Business Testing Ad Intros

A local café used AI to create two Instagram ads.

- Version A: Focused on product shots (coffee, pastries).

- Version B: Featured smiling baristas greeting customers.

The second version saw a 28% higher engagement rate and a 40% lift in saves, suggesting that for this audience, human connection outperformed polished product shots.

Case Study 2: Influencer Testing Voice Styles

A fitness creator tested two Reels using AI voiceovers.

- Version A: Neutral voice

- Version B: Motivational, upbeat voice

The second version improved completion rate by 33% — viewers stayed till the end.

Case Study 3: E-commerce Brand Testing CTAs

An apparel brand ran AI-generated product videos with two CTAs: “Shop Now” vs. “Discover Your Style.”

The softer CTA increased conversion by 22%, showing how subtle language shifts influence behavior.

Final Takeaway: Test, Learn, and Keep Creating

A/B testing isn’t about proving one version right or wrong; it’s about learning. The best marketers treat every test as a discovery process.

AI has made it easier to create, test, and refine video content at scale. But the magic still lies in curiosity: the willingness to question, test, and adapt.

Start small. Run a simple test on your next two Reels or YouTube Shorts. Measure the data. Learn from it. Repeat. Each test sharpens your instincts and strengthens your storytelling.

As one marketing expert put it, “Creativity becomes powerful when curiosity meets data.”