In today’s fast-paced digital advertising world, where every click matters, A/B testing is an important step in optimizing your display banner ads, with the potential to boost ROI by up to 30%. A/B testing, also known as split testing, is a method marketers use to find the best-performing version of an ad by comparing two or more versions against each other. By experimenting with elements such as images, headlines, calls to action (CTAs), and audience targeting, businesses can learn a lot about what works best with their audience. For businesses looking to increase ad engagement, save money on ads, and optimize their strategies, A/B testing display banner ads is a game changer: it helps you improve your click-through rate (CTR) and conversions based on evidence instead of guesswork. This blog is a complete guide to A/B testing: how it works and why testing your banner ads matters. Let’s get started!

What is A/B Testing?

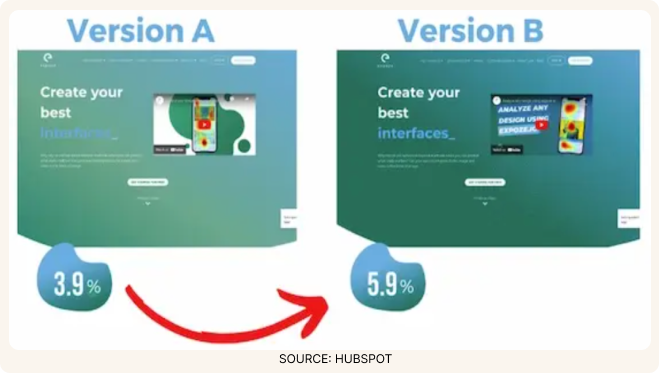

A/B testing compares two versions of an ad to see which performs better. Imagine making two banner ads for a new product: version A has a red background and version B has a blue background. By showing each version to a randomly selected half of the same target audience, you can find out which design gets more clicks.

Here is an example: let’s say you’re promoting a summer sale. Version A of the banner shows a beach with the words “Save Big on Summer Sale,” while Version B shows a product-focused image with “Exclusive Summer Deals Await!” By showing each design to a different group, you can check which one resonates more with your audience. Version A may drive more engagement with its aspirational imagery, while Version B may convert better by highlighting the product directly. Because these two versions differ in both image and headline, the test tells you which combination wins rather than which single element made the difference. The results can then guide your campaign strategy.

How Does A/B Testing Work?

The process involves:

- Setting a Goal: Decide what you want to improve, such as CTR or conversions.

- Creating Variants: Create two or more variants of your display banner ad, changing only one element (such as the headline, CTA, or color scheme).

- Split Testing: Randomly divide your audience into equal groups so that each group sees only one version of the ad.

- Collecting Data: Run the test long enough to collect sufficient data for every variant.

- Analyzing the Results: Use statistical analysis to determine whether one variant genuinely outperformed the other or whether the difference is just random noise. The findings will help you build a better campaign strategy.
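To make the statistical analysis step more concrete, here is a minimal sketch of a two-proportion z-test, one common way to check whether the difference in CTR between two variants is statistically significant. The click and impression counts are hypothetical, and the function name is illustrative. In practice, ad platforms and A/B testing tools usually run an equivalent (or more sophisticated) test for you.

```python
import math


def two_proportion_z_test(clicks_a, impressions_a, clicks_b, impressions_b):
    """Two-sided z-test for the difference between two click-through rates.

    Returns (ctr_a, ctr_b, z, p_value). Assumes impressions are independent
    and counts are large enough for the normal approximation to hold.
    """
    ctr_a = clicks_a / impressions_a
    ctr_b = clicks_b / impressions_b
    # Pooled CTR under the null hypothesis that both variants perform the same
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    z = (ctr_b - ctr_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return ctr_a, ctr_b, z, p_value


# Hypothetical counts for illustration only
ctr_a, ctr_b, z, p = two_proportion_z_test(400, 20_000, 480, 20_000)
print(f"CTR A = {ctr_a:.2%}, CTR B = {ctr_b:.2%}, z = {z:.2f}, p = {p:.4f}")
```

A p-value below your chosen threshold (commonly 0.05) suggests that the difference is unlikely to be due to chance alone. A higher p-value means that you should keep the test running or treat the result as inconclusive.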

Practical Applications of A/B Testing in Advertising

- Testing headlines: The headline is often the first thing your audience reads, so testing one headline against another shows which wording attracts more clicks. For example, “Exclusive deals today” may attract more attention than “Explore our new range of products.”

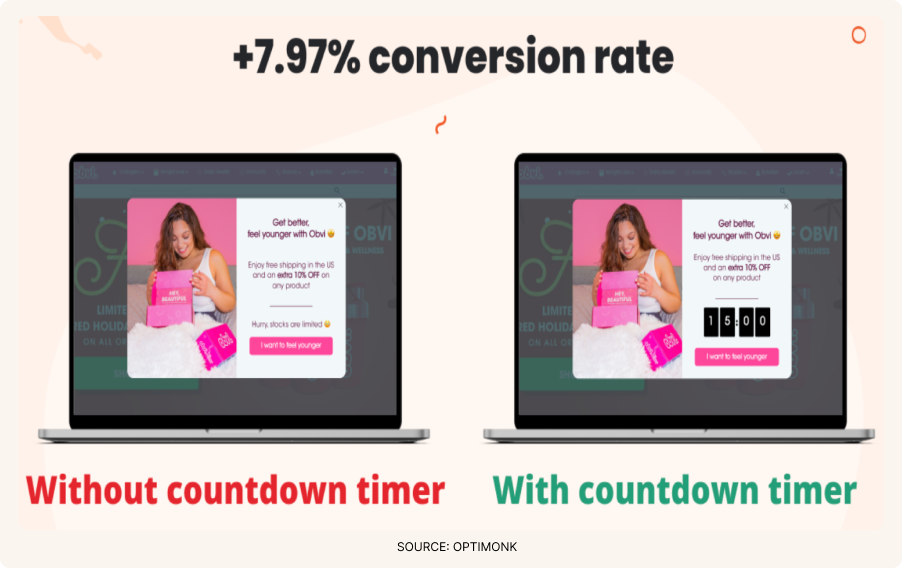

- Visual elements: Visuals are critical to attracting people to your website. Testing different images, background colors, or graphics in versions A and B shows you which visual works best. For instance, a brightly colored banner might draw more clicks than one with muted tones.

- CTA: Your CTA is what prompts your audience to take action. It is not always the lever that drives conversions, but testing “Shop Now” against “Get Started,” along with button colors and placements, can show you what moves your audience to act.

- Ad Placement: Placement can make all the difference. Test your ad on different websites, in apps, and in different positions on a page to learn which placements get the best engagement. For example, an ad placed above the fold usually gets more attention than one placed further down the page.

Why Is A/B Testing Important for Display Banner Ads?

Display banner ads are often a potential customer’s first encounter with your brand. A/B testing is a fundamental strategy for making sure that this first impression is a good one. Here’s why:

- Minimizing Assumptions: Without A/B testing to validate their choices, marketers have to rely on educated guesses or follow industry trends, even though those trends might not suit their audience. A/B testing takes the guesswork out of ad design by providing actionable insights based on user behavior.

- Maximizing ROI: Knowing which elements of your display ads perform best, whether visuals, copy, or CTAs, lets you use your budget more economically so that every dollar has the greatest possible impact.

- User Experience Improvement: Relevant ads create a more engaging and seamless experience for the audience. For instance, using language or imagery that resonates with your target demographic can significantly improve click-through and conversion rates.

- Staying Up-to-date with Algorithm Changes: Search engine and ad platform algorithms change constantly. Regular A/B testing helps you keep up by continually refreshing and optimizing your ads so that they stay effective as platforms evolve.

- Understanding Your Audience: Different audiences react differently to ads. A/B testing reveals valuable insights into different demographic segments, allowing for more tailored and effective marketing.

- Building Credibility and Trust: Because well-optimized ads are less likely to feel intrusive or irrelevant, they make your brand look more reputable and professional to consumers.

Create stunning banners effortlessly using Predis.ai's AI Banner Maker - boost your ad performance and conversions.

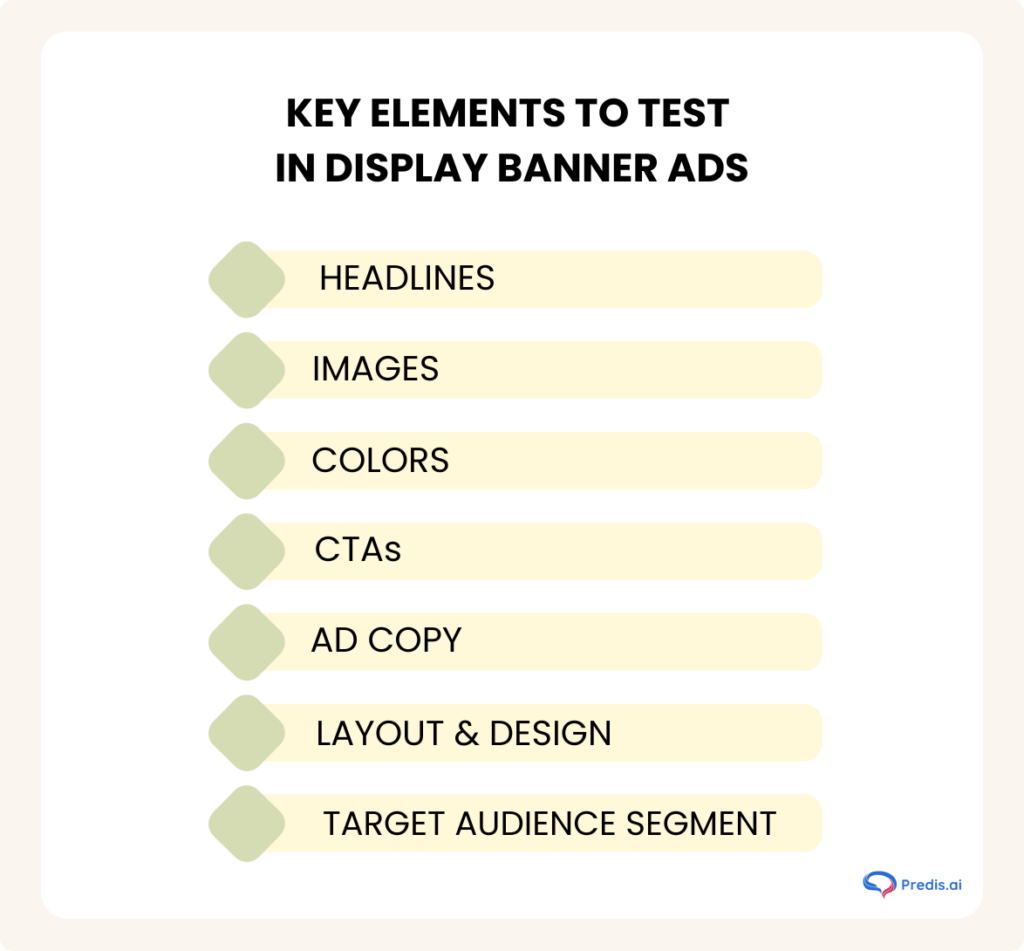

Display Banner Ad Elements to Test

Below are the key elements of display banner ads that should be A/B tested:

- Headlines: Test different wording, lengths, and tones across versions.

- Images: Try different types of visuals, such as photos, illustrations, or icons, to see which engages users best.

- Colors: Experiment with color schemes, because different colors can affect users’ emotions and actions in different ways.

- CTAs: Test different text, fonts, placements, sizes, and colors.

- Ad copy: Test different styles and lengths to find what works best.

- Layout and design: Experiment with different arrangements of elements to find the most effective layout and design for your display banner ad.

- Target Audience Segments: Compare your ad’s performance across different demographics, locations, or devices to make sure that you are targeting the right audience.

How to Conduct an Effective A/B Test for Display Banner Ads

Set Clear Objectives

Decide what the test should improve, such as CTR, conversion rate, or engagement. Clear objectives keep the test aligned with your marketing goals and produce results that are easy to measure.

Create Hypotheses

Formulate a specific, testable hypothesis about what will improve the ad’s performance. For example: “We expect a 10% increase in conversions if we change the CTA button color from green to red.” A strong hypothesis gives your test direction and focus.

Build Variants

Create multiple versions of your ad, each with one main difference, such as the headline, image, or CTA button. Changing one variable at a time lets you attribute any change in performance to that specific variation.

Select Metrics

Determine which key performance indicators (KPIs) you will measure. These might include primary KPIs such as CTR and conversion rate, as well as secondary KPIs such as time spent on the landing page and bounce rate.
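The primary KPIs are simple ratios, and a hypothesis such as “a 10% increase in conversions” is a relative lift over the baseline. The minimal sketch below computes both from raw counts. The class and field names and all the numbers are hypothetical. It also assumes that conversion rate means conversions per click, but some platforms measure it per impression.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw counts for one ad variant (hypothetical structure)."""
    name: str
    impressions: int
    clicks: int
    conversions: int

    @property
    def ctr(self) -> float:
        # Click-through rate: share of impressions that produced a click
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def conversion_rate(self) -> float:
        # Assumed definition: conversions per click
        return self.conversions / self.clicks if self.clicks else 0.0


def relative_lift(baseline: float, variant: float) -> float:
    """Relative change of the variant vs. the baseline (0.10 means +10%)."""
    if baseline == 0:
        raise ValueError("baseline rate must be non-zero")
    return (variant - baseline) / baseline


# Hypothetical numbers for illustration only
a = VariantStats("A (green CTA)", impressions=50_000, clicks=1_000, conversions=50)
b = VariantStats("B (red CTA)", impressions=50_000, clicks=1_050, conversions=58)

for v in (a, b):
    print(f"{v.name}: CTR = {v.ctr:.2%}, conversion rate = {v.conversion_rate:.2%}")
lift = relative_lift(a.conversion_rate, b.conversion_rate)
print(f"Conversion-rate lift of B over A: {lift:+.1%}")
```

A lift figure on its own does not show whether the difference is real. It still needs a significance check before you declare a winner.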

Run the Test

Use an ad management tool such as Google Ads or Meta Ads Manager to split your audience evenly so that each group sees a different variant. Random allocation reduces bias and enables a fair comparison.
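Ad platforms handle the split for you, but the idea behind random allocation can be expressed in a few lines. The sketch below uses a hypothetical helper, not the method that Google Ads or Meta Ads Manager uses internally. It hashes a user ID together with an experiment name so that each user is assigned to a variant effectively at random but always sees the same variant on repeat visits.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one of the variants.

    Hashing the experiment name plus the user ID spreads users roughly
    evenly across variants, and the same user always gets the same bucket.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Simulate 10,000 hypothetical users to check that the split is close to 50/50
counts = Counter(assign_variant(f"user-{i}", "summer-sale-banner") for i in range(10_000))
print(counts)
```

Including the experiment name in the hash means that a user who lands in group A for one test is not automatically placed in group A for every other test.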

Monitor Duration

Don’t cut a test short. Ending a test too soon may lead to inaccurate conclusions. How long a test needs to run depends on your traffic volume and the confidence level you require, so decide on the duration before you start.
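One common way to estimate the required duration before launching is a power calculation. The sketch below uses the standard normal-approximation formula for comparing two proportions. The baseline CTR, target lift, and daily traffic are hypothetical inputs. An alpha of 0.05 and 80% power are conventional defaults rather than fixed rules.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, min_relative_lift, alpha=0.05, power=0.8):
    """Approximate impressions needed per variant to detect a given lift.

    Uses the normal-approximation formula for a two-sided test comparing
    two proportions (e.g. the CTRs of variants A and B).
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


def days_needed(n_per_variant, daily_impressions, n_variants=2):
    """Days to reach the sample size if traffic is split evenly across variants."""
    return math.ceil(n_per_variant * n_variants / daily_impressions)


# Hypothetical inputs: 2% baseline CTR, detect a 10% relative lift,
# 20,000 impressions per day shared by both variants
n = sample_size_per_variant(0.02, 0.10)
print(f"~{n:,} impressions per variant, ~{days_needed(n, 20_000)} days")
```

Smaller expected lifts or lower traffic quickly increase the required duration. Many teams also round the duration up to whole weeks so that both weekday and weekend behavior are captured.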

Analyze Results

Use analytics tools such as heatmaps, Google Analytics, or dedicated A/B testing software to analyze the results. Compare the performance metrics of each variant to identify the winner.

Implement Changes

Once you have identified a winning variant, roll it out in your regular ad campaigns, and use what you learned to plan future tests.

Case Studies of Successful A/B Testing

Case Study 1: Bannersnack Boosted Sign-Ups with A/B Testing

Bannersnack, known for its online ad design tools, wanted to improve the overall experience on its advertising page and increase sign-up rates. However, deciding where to start proved difficult. To solve this, the Bannersnack team used the Hotjar click heatmap tool to assess user patterns and behavior. The heatmaps highlighted the areas that attracted the most attention and revealed the areas that visitors ignored entirely. This information helped Bannersnack form an educated hypothesis: adding large, high-contrast CTA buttons would substantially improve the conversion rate.

- To test this hypothesis, the team ran an in-house A/B test.

- The test compared the original design against a version with the modified CTA button.

- The results were clear: the redesigned layout achieved a 25% higher CTR than the original design.

After each change, the team examined the heatmaps again to see which elements still needed work. This iterative process shows how strategic, data-driven design can deliver steady improvements.

Key insights:

- Drivers: Understand why users want to come to your page.

- Barriers: Identify what stops people from trying your product or converting.

- Hooks: Give users clear reasons to take the actions you want.

Case Study 2: Turum-Burum Optimized a Checkout Flow

Turum-Burum, a digital UX design agency, worked with the Ukrainian e-commerce shoe retailer Intertop to improve its checkout conversions. Exit-intent surveys conducted during the UX analysis showed that 48.6% of users abandoned checkout because they were unable to complete the form. Based on these findings, the team formed hypotheses and built its A/B testing strategy around them.

- The strategy included key optimizations such as reducing the number of form fields, organizing the page into distinct sections, and adding an autofill feature to speed up checkout.

- The team used session replay tools and heatmaps to monitor user interactions, uncovering issues such as rage clicks (repeated, rapid clicks) and confusing navigation paths.

The changes produced the following results:

- Conversion rate increased by 54.68%.

- Average revenue per user (ARPU) grew by 11.46%.

- Checkout bounce rate decreased by 13.35%.

Common Mistakes to Avoid in A/B Testing

- Testing multiple variables at once makes it hard to see what caused a change in performance. Stick to one variable per test for clear results.

- Ending tests too early can produce unreliable data. Let tests run long enough to gather meaningful insights for your traffic and audience size.

- Small or unrepresentative samples can skew the results. Make sure that you test with a large enough audience to get accurate results.

- Changing a test mid-run compromises the accuracy of the data. Set the test up fully before starting, and avoid adjustments while it runs.

- Failing to document tests wastes what you have learned. Record every step, outcome, and takeaway in a test management platform so that you have clear, reliable records to guide future campaigns.

- CTR is useful but doesn’t tell the whole story. Track other metrics such as conversions, ROI, and bounce rate for a complete picture of what is happening.

- Ignoring demographic, device, or location data can hide important opportunities. Always analyze segmented data for better targeting.

- Focusing only on the winning variant can mean missing valuable insights. Review all the data to understand what worked and how to improve further.
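The risks of ending tests early and making mid-run decisions are easy to underestimate. The minimal simulation below uses assumed traffic numbers and a seeded random generator to run many hypothetical A/A tests, in which both variants are identical, so any declared “winner” is a false positive. It then compares two approaches: checking significance once at the planned end, and checking every day and stopping at the first significant result.

```python
import math
import random


def p_value(clicks_a, n_a, clicks_b, n_b):
    """Two-sided p-value from a two-proportion z-test."""
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (clicks_b / n_b - clicks_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_tests(n_tests=300, days=14, daily_users=200, rate=0.10, alpha=0.05, seed=42):
    """Return (false-positive rate with one final check,
    false-positive rate when checking daily and stopping at the first 'win')."""
    rng = random.Random(seed)
    fp_fixed = fp_peeking = 0
    for _ in range(n_tests):
        clicks_a = clicks_b = n = 0
        flagged = False
        for _day in range(days):
            # Both variants share the same true rate (an A/A test)
            clicks_a += sum(rng.random() < rate for _ in range(daily_users))
            clicks_b += sum(rng.random() < rate for _ in range(daily_users))
            n += daily_users
            if p_value(clicks_a, n, clicks_b, n) < alpha:
                flagged = True  # a daily "peek" would have declared a winner
        fp_peeking += flagged
        fp_fixed += p_value(clicks_a, n, clicks_b, n) < alpha
    return fp_fixed / n_tests, fp_peeking / n_tests


fixed_rate, peeking_rate = simulate_aa_tests()
print(f"False positives, single final check: {fixed_rate:.1%}")
print(f"False positives, daily peeking:      {peeking_rate:.1%}")
```

The single final check should land near the nominal 5%, while daily peeking typically produces a much higher false-positive rate. This is why the test duration should be fixed in advance.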

Conclusion

A/B testing is an essential tool for marketers looking to improve their display banner ads and increase the impact of their advertising campaigns. It improves ad performance and helps ensure that resources are allocated efficiently. Because it relies on real user behavior, it lets you make decisions based on data rather than assumptions. It also builds a stronger relationship with your audience by revealing their preferences so that you can tailor your marketing to their specific needs. By avoiding common mistakes and documenting their results, businesses can maintain a competitive edge and set their digital marketing efforts up for long-term success.

FAQs

How long should an A/B test run?

It depends on your audience size and traffic volume, but as a general guideline, run A/B tests for at least two weeks.

How many variants should I test?

Start by testing two variants (A and B) to get clear, actionable results.

Can A/B testing be used for remarketing ads?

Yes, A/B testing can be used for remarketing campaign ads.

What tools can I use for A/B testing?

You can use tools such as Optimizely and Adobe Target. Google Optimize, which was once a popular option, was discontinued by Google in September 2023.

How can I make sure that my A/B test results are accurate?

Ensure a large enough sample size, test one variable at a time, and avoid ending tests prematurely.
