If you are chronically online like me, you have probably stumbled across AI-generated videos. Some of you might have even experimented with these tools, created videos, and shared them on your social media channels. But have you ever wondered whether that content actually belongs to you?

Is the content you are putting out copyrighted under your name, or is it free for anyone to repurpose and reuse? That is exactly what we will address in this blog. The copyright issues, the ethical gray zones, and everything in between are dissected in detail in the sections below.

What are deepfakes and AI-generated videos?

AI-generated videos use machine learning (ML) and generative models to synthesize videos, images, and speech with a human likeness. Deepfakes, on the other hand, swap or alter faces, voices, and gestures in real footage.

This technology was first used mainly in entertainment, to make visual effects easier to produce. It has since spread beyond that niche into marketing, social media, education, and more. It has made content generation easier and even helped small businesses produce content on a smaller budget.

Unfortunately, deepfakes and AI-generated content are also used to spread misinformation, which has given them a bad reputation, and they raise difficult copyright questions. This is why the ethical and privacy issues behind AI content have to be understood, so that it can be used responsibly.

Who owns AI-generated media?

When an artist paints a picture or a creator makes a video, the work is generally attributed to the person who made it. In such cases, it is clear what the copyrighted work is and who it belongs to.

With AI-generated media and deepfakes, however, there is no clear creator or owner, so the boundaries of copyright are blurry as well. AI models are trained on large quantities of existing data, and the final output is derived from what they have learned.

Intellectual property laws are still ambiguous about whether AI-generated content can be copyrighted, which often leaves it unprotected.

One practical tip: document and save your own creative contributions, such as your edits and the choices you made, so that you can support a claim of ownership over the parts you created.

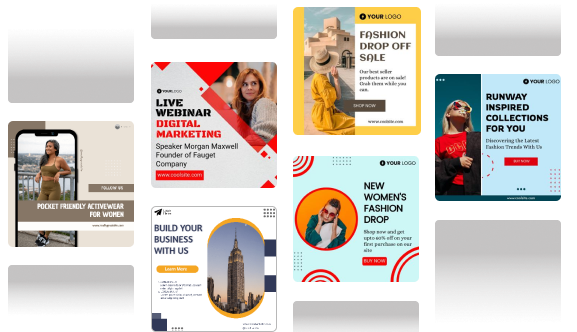

Risks associated with AI-generated and deepfake content

So, what risks of AI-generated content and deepfakes do you need to know about? Here are some of the main ones:

1. Copyright issues

Since AI learns from countless online materials, including copyrighted content, its output cannot be treated as entirely original. There is also a separate hurdle: US Copyright Office guidance states that works without human authorship cannot be copyrighted.

If AI-generated output happens to closely resemble existing copyrighted content, it can lead to legal complications such as infringement claims.

2. Identity theft

When an AI-generated persona resembles a real person, it can lead to harmful impersonation. You also should not use someone’s likeness without their consent. Ignoring this can lead to serious problems, including legal trouble, blackmail, and reputational damage.

3. Misinformation and manipulation

Deepfakes can produce highly realistic content that spreads false information, blurring the line between fact and fiction. When users cannot tell true information from false, the consequences can be serious: deepfakes can influence politics, distort public opinion, and even endanger communities.

4. Discrimination

If an AI model is trained on biased data, its output will likely be biased as well, producing results that treat some groups unfairly or do not show the whole picture. This is why you should always review AI-generated content to make sure it does not contain discriminatory messages.

5. Accountability

Since AI-generated content has no clear owner, it is often unclear who should be held accountable when it spreads misinformation. This makes it very hard to hold anyone responsible or to prevent such incidents, which is why accountability is an ethical concern when it comes to AI-generated content.

6. Carbon footprint

Running AI systems requires large amounts of electricity, and the data centers behind them also use significant amounts of water for cooling, which creates environmental problems. This large-scale impact on the environment is also an ethical concern that needs to be addressed.

Addressing Ethical Risks: Building a Responsible AI Future

While copyright and defamation are major concerns, the bigger question is: how do we use AI and deepfakes responsibly? It’s not just a legal issue; it’s a moral one. Building an ethical AI future requires effort from everyone involved: creators, tech companies, policymakers, and even everyday users.

Here’s how we can start making that shift.

1. Transparency and Disclosure

If you’re using AI to create content, be open about it. Labeling videos, images, or text as AI-generated gives audiences context and helps them decide how much to trust what they see. It’s a simple yet powerful step toward rebuilding credibility in an era of synthetic media.

2. Regulation and Legal Frameworks

Governments and international bodies must modernize existing laws to address AI’s impact. This includes clarifying copyright ownership for AI-generated works, setting liability for harmful or misleading AI content, and ensuring stronger privacy protections. The law shouldn’t stifle innovation, but it does need to safeguard the public.

3. Bias Mitigation Strategies

AI reflects the data it’s trained on, which means it can also replicate human bias. Developers can reduce this by auditing datasets, testing models for fairness, and diversifying training material. These steps may sound technical, but they’re critical for ensuring AI doesn’t unintentionally discriminate or stereotype.

4. Education and Media Literacy

One of the best defenses against misinformation is awareness. Teaching people how to identify deepfakes, fact-check content, and think critically about online media can prevent manipulation. Schools, organizations, and platforms all have a role to play in building media literacy.

5. Sustainable AI Development

Beyond ethics and law, there’s also the question of environmental responsibility. AI systems consume a lot of energy, especially during training. Moving toward energy-efficient models and greener computing practices can make AI innovation more sustainable in the long run.

How Businesses Can Stay Safe

For brands using AI tools to create content, the smartest move is to stay proactive. Here are some quick tips:

- Always read the licensing agreements of AI tools before use.

- Avoid uploading proprietary or copyrighted material as input data.

- Add disclaimers or labels if your content is AI-assisted.

- Use AI ethically: don’t manipulate, mislead, or impersonate real people.

- Stay updated on evolving AI and copyright laws in your region.

Taking these precautions protects both your brand’s reputation and your audience’s trust.

Final thoughts

AI-generated content and deepfakes have made content creation accessible to the masses, but copyright remains a major concern. This ability comes with the responsibility to create content ethically and not use it to spread misinformation.

The legal community is working toward a point where AI-generated content can be properly regulated. Until then, it is every individual’s responsibility to prioritize honesty and use AI ethically. So go ahead and create, but use AI responsibly!

FAQ:

Are AI-generated videos protected by copyright?

Not always. So far, in many regions, videos generated entirely by AI are not protected by copyright, because copyright law generally protects only content created by humans.

Can you get sued for using someone’s likeness in AI-generated content?

Yes. Using someone’s image without their consent can violate their privacy, and if the content is misleading or derogatory, it can also amount to defamation.

How can you spot AI-generated or deepfake content?

Subtle signs such as unnatural lighting, visual glitches, and lip movements that don’t match the audio can help identify AI-generated content. Some social media platforms are also working on adding AI labels to content so that people are not misled.